Just one day after OpenAI revealed GPT-4o, which it bills as being able to understand what’s taking place in a video feed and converse about it, Google announced Project Astra, a research prototype that features similar video comprehension capabilities. It was announced by Google DeepMind CEO Demis Hassabis on Tuesday at the Google I/O conference keynote in Mountain View, California.

Hassabis called Astra “a universal agent helpful in everyday life.” During a demonstration, the research model showcased its capabilities by identifying sound-producing objects, providing creative alliterations, explaining code on a monitor, and locating misplaced items. The AI assistant also exhibited its potential in wearable devices, such as smart glasses, where it could analyze diagrams, suggest improvements, and generate witty responses to visual prompts.

Google says that Astra uses the camera and microphone on a user’s device to provide assistance in everyday life. By continuously processing and encoding video frames and speech input, Astra creates a timeline of events and caches the information for quick recall. The company says that this enables the AI to identify objects, answer questions, and remember things it has seen that are no longer in the camera’s frame.

Project Astra: Google’s vision for the future of AI assistants.

While Project Astra remains an early-stage feature with no specific launch plans, Google has hinted that some of these capabilities may be integrated into products like the Gemini app later this year (in a feature called “Gemini Live”), marking a significant step forward in the development of helpful AI assistants. It’s a stab at creating an agent with “agency” that can “think ahead, reason and plan on your behalf,” in the words of Google CEO Sundar Pichai.

Elsewhere in Google AI: 2 million tokens

During Google I/O, the company unveiled a large number of AI-related announcements, some of which we may cover in separate posts in the future. But for now, here’s a quick overview.

At the top of the keynote, Pichai mentioned an "improved" version of February's Gemini 1.5 Pro (same version number, oddly) that is coming soon. It will feature a 2-million-token context window, which means it can process large numbers of documents or long stretches of encoded video at once. Tokens are fragments of data that AI language models use to process information, and the context window determines the maximum number of tokens an AI model can process at once. Currently, 1.5 Pro tops out at 1 million tokens (for comparison, OpenAI's GPT-4 Turbo has a 128,000-token window).

We asked AI researcher Simon Willison, who does not work for Google but was featured in a promo video during the keynote, what he thought of the context window announcement. "Two million tokens is exciting," he replied via text while sitting in the keynote audience. "But it's worth keeping price in mind that $7 per million tokens means a single prompt could cost you $14!" Through its API, Google charges $7 per million input tokens for 1.5 Pro on prompts longer than 128,000 tokens.
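Willison's $14 figure is simple arithmetic: a prompt that fills the whole 2-million-token window, billed at the long-prompt rate of $7 per million input tokens. The sketch below reproduces it, assuming the rate applies to the entire prompt and counting input tokens only (output tokens are billed separately and are ignored here). The names `input_cost` and `LONG_PROMPT_RATE_PER_MILLION` are illustrative, not part of any Google API.

```python
from decimal import Decimal

# Long-prompt input rate for Gemini 1.5 Pro as quoted in the article, in US dollars.
# Assumption: the rate applies to the whole prompt; output tokens are not counted.
LONG_PROMPT_RATE_PER_MILLION = Decimal("7.00")


def input_cost(tokens: int, rate_per_million: Decimal) -> Decimal:
    """Return the dollar cost of sending `tokens` input tokens at a per-million rate."""
    # Decimal avoids floating-point rounding noise in money calculations.
    return Decimal(tokens) / Decimal(1_000_000) * rate_per_million


# A single prompt that fills the full 2-million-token context window.
full_window_cost = input_cost(2_000_000, LONG_PROMPT_RATE_PER_MILLION)
print(f"${full_window_cost:.2f}")  # prints "$14.00"
```

Using `Decimal` rather than floats keeps the dollar amounts exact, which matters once you start multiplying small per-token rates by millions of tokens.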

Speaking of tokens, Google said that the 1 million-token context window it had previously announced for Gemini 1.5 Pro is finally coming to Gemini Advanced subscribers. Until now, it was only available through the API.

Google also announced a new AI model called Gemini 1.5 Flash, which it billed as a lightweight, faster, and less expensive version of Gemini 1.5. “1.5 Flash is the newest addition to the Gemini model family and the fastest Gemini model served in the API. It’s optimized for high-volume, high-frequency tasks at scale,” says Google.

Willison had a comment on Flash as well: “The new Gemini Flash model is promising there, it’s meant to provide up to 2m tokens at a lower price.” Flash costs $0.35 per million tokens on prompts up to 128,000 tokens and $0.70 per million tokens for prompts longer than 128,000. It’s one-tenth the price of 1.5 Pro.

“35 cents per million tokens! That’s the biggest news of the day, IMO,” Willison told us.
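The "one-tenth the price" comparison can be checked with a small tiered-pricing sketch. Prompts of up to 128,000 tokens use the lower rate; longer prompts use the higher rate. The Flash rates and the $7 long-prompt Pro rate come from the article. The $3.50 short-prompt Pro rate is an assumption inferred from the one-tenth comparison. As above, this assumes the selected rate applies to the whole prompt and counts input tokens only. `tiered_input_cost` and the rate tables are hypothetical names for illustration.

```python
from decimal import Decimal

# Prompts up to this many tokens are billed at the lower tier (per the article).
TIER_THRESHOLD_TOKENS = 128_000

# (short-prompt rate, long-prompt rate) in US dollars per million input tokens.
FLASH_RATES = (Decimal("0.35"), Decimal("0.70"))  # quoted in the article
# Assumption: $3.50 is inferred from "one-tenth the price"; $7.00 is quoted in the article.
PRO_RATES = (Decimal("3.50"), Decimal("7.00"))


def tiered_input_cost(prompt_tokens: int, rates: tuple[Decimal, Decimal]) -> Decimal:
    """Pick the tier by total prompt length, then bill the whole prompt at that rate."""
    short_rate, long_rate = rates
    rate = short_rate if prompt_tokens <= TIER_THRESHOLD_TOKENS else long_rate
    return Decimal(prompt_tokens) / Decimal(1_000_000) * rate


for tokens in (100_000, 2_000_000):
    flash = tiered_input_cost(tokens, FLASH_RATES)
    pro = tiered_input_cost(tokens, PRO_RATES)
    print(f"{tokens:>9,} tokens: Flash ${flash:.3f}, Pro ${pro:.3f}, ratio {pro / flash}")
```

At both tiers the Pro cost comes out to ten times the Flash cost. A prompt that fills a 2-million-token window would cost $1.40 on Flash versus $14 on Pro.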

Google also announced Gems, which appears to be its take on OpenAI's GPTs. Gems are custom versions of the Google Gemini chatbot that play a role you define, allowing you to personalize Gemini in different ways. Google lists examples of potential Gems as "a gym buddy, sous chef, coding partner or creative writing guide."

New generative AI models

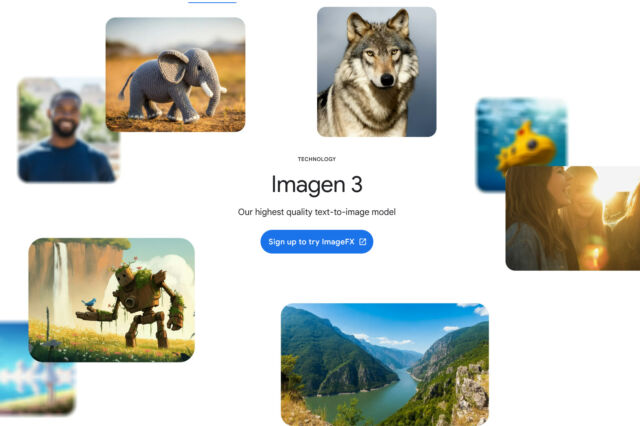

Also at the Google I/O keynote on Tuesday, Google announced several new generative AI models for creating images, audio, and video. Imagen 3 is the latest in its line of image synthesis models, which Google says is its “highest quality text-to-image model, capable of generating images with even better detail, richer lighting and fewer distracting artifacts than our previous models.”

Google also showed off its Music AI Sandbox, which Google bills as “a suite of AI tools to transform how music can be created.” It combines its YouTube music project with its Lyria AI music generator into tools for musicians.

The company also announced Google Veo, a text-to-video generator that creates 1080p videos from prompts at a quality that seems to match OpenAI's Sora. Google says it is working with actor Donald Glover to create an AI-generated demonstration film that will debut soon. It's far from Google's first AI video generator, but it seems to be its most capable so far.

The sample video above, provided by Google, used the prompt, “A lone cowboy rides his horse across an open plain at beautiful sunset, soft light, warm colors.”

Google says that starting today, its new AI creative tools are available only to select creators in a private preview, but that wait lists are open.